Today I decided to see about installing Portworx on OpenShift, with the goal of being able to move applications there from my production RKE2 cluster. I previously installed OpenShift using installer-provisioned infrastructure (IPI); rebuilding it will be a post for another day. It is a basic cluster with 3 control plane nodes and 3 worker nodes.

Of course, I need a workstation with the OpenShift client (oc) installed to interact with the cluster. I will admit that I am about as dumb as a post when it comes to OpenShift, but we all have to start somewhere! Log in to the OpenShift cluster and make sure kubectl works:

```
oc login --token=****** --server=https://api.oc1.lab.local:6443
kubectl get nodes
NAME                     STATUS   ROLES    AGE   VERSION
oc1-g7nvr-master-0       Ready    master   17d   v1.23.5+3afdacb
oc1-g7nvr-master-1       Ready    master   17d   v1.23.5+3afdacb
oc1-g7nvr-master-2       Ready    master   17d   v1.23.5+3afdacb
oc1-g7nvr-worker-27vkp   Ready    worker   17d   v1.23.5+3afdacb
oc1-g7nvr-worker-2rt6s   Ready    worker   17d   v1.23.5+3afdacb
oc1-g7nvr-worker-cwxdm   Ready    worker   17d   v1.23.5+3afdacb
```
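If you want a scripted version of that sanity check, here is a minimal sketch. The function name check_cluster_ready and its default timeout are my own inventions, not anything from OpenShift or Portworx, and it assumes oc and kubectl are already on your PATH and logged in. Instead of eyeballing the table, it waits for every node to report Ready:

```shell
# Sketch only: hypothetical helper, assumes oc/kubectl are on PATH and logged in.
check_cluster_ready() {
  # Optional first argument overrides the (arbitrary) default timeout.
  local timeout="${1:-120s}"
  # Prints the logged-in user; fails if the oc token is missing or expired.
  oc whoami || return 1
  # Blocks until every node has condition Ready=True, or the timeout expires.
  kubectl wait --for=condition=Ready node --all --timeout="$timeout"
}
```

Running check_cluster_ready 60s should print the user name followed by one "condition met" line per node.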

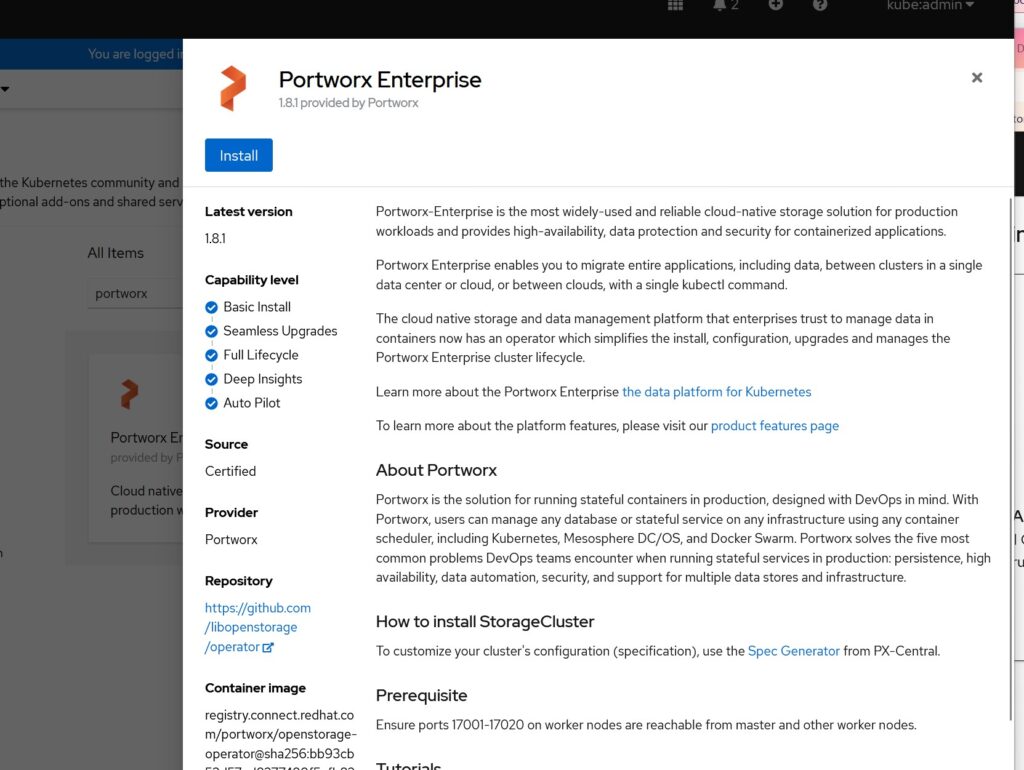

Next, I went over to PX-Central to create a spec. One important note! Unlike installing Portworx on other distributions, OpenShift requires you to install the Portworx Operator from the OpenShift OperatorHub. Being lazy, I used the console:

I was a little curious about the version shown (v2.11 is the current version of Portworx as of this writing). What you are seeing here is the version of the operator that gets installed, not of Portworx itself. The operator is what provides the StorageCluster object. If you skip installing the operator (and just blindly click through the links in the spec generator), you will get the following error when you go to install Portworx:

```
error: resource mapping not found for name: "px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c" namespace: "kube-system" from "px-operator-install.yaml": no matches for kind "StorageCluster" in version "core.libopenstorage.org/v1"
```
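A small guard avoids that error entirely by checking for the StorageCluster custom resource definition before applying anything. This is a sketch that assumes the operator registers a CRD named storageclusters.core.libopenstorage.org (the plural of the StorageCluster kind in the core.libopenstorage.org group named in the error); apply_px_spec is a hypothetical helper name:

```shell
# Sketch only: hypothetical helper. The CRD name is assumed from the kind and
# API group in the "no matches for kind" error above.
apply_px_spec() {
  local spec="${1:?usage: apply_px_spec <spec.yaml>}"
  # Fail early if the Portworx Operator (and its CRD) is not installed yet.
  if ! kubectl get crd storageclusters.core.libopenstorage.org >/dev/null 2>&1; then
    echo "StorageCluster CRD not found; install the Portworx Operator from OperatorHub first" >&2
    return 1
  fi
  kubectl apply -f "$spec"
}
```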

Again, I chose to let Portworx automatically provision VMDKs for this installation (I was less than excited about cracking open the black box of the OpenShift worker nodes).
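Letting Portworx create the VMDKs means it needs vCenter credentials, which the spec reads from a secret called px-vsphere-secret with the keys VSPHERE_USER and VSPHERE_PASSWORD. One way to produce that file, sketched here with a hypothetical helper (make_vsphere_secret) and placeholder credentials, is to have kubectl build it in client-side dry-run mode so the values are base64-encoded for you:

```shell
# Sketch only: hypothetical helper. Writes px-vsphere-secret.yaml in the
# current directory; the secret and key names match the StorageCluster spec.
make_vsphere_secret() {
  local user="${1:?vCenter user}" password="${2:?vCenter password}"
  # --dry-run=client -o yaml renders the Secret without touching the cluster.
  kubectl -n kube-system create secret generic px-vsphere-secret \
    --from-literal=VSPHERE_USER="$user" \
    --from-literal=VSPHERE_PASSWORD="$password" \
    --dry-run=client -o yaml > px-vsphere-secret.yaml
}
```

For example, make_vsphere_secret 'administrator@vsphere.local' 'changeme', where both values are placeholders for your own vCenter account.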

```
kubectl apply -f px-vsphere-secret.yaml
secret/px-vsphere-secret created
kubectl apply -f px-install.yaml
storagecluster.core.libopenstorage.org/px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c created
```
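Rather than re-running get pods until everything settles, you can have kubectl block until the Portworx pods are Ready. This sketch assumes the pods carry the name=portworx label (the selector the Portworx documentation uses for its pods); wait_for_portworx is a hypothetical helper and the 15 minute default is an arbitrary guess for a lab cluster:

```shell
# Sketch only: hypothetical helper; the name=portworx label is an assumption.
# kubectl wait errors out if no pods match yet, so run it after the operator
# has had a moment to create them.
wait_for_portworx() {
  local timeout="${1:-15m}"
  kubectl -n kube-system wait --for=condition=Ready pod \
    -l name=portworx --timeout="$timeout"
}
```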

```
kubectl -n kube-system get pods
NAME                                                    READY   STATUS    RESTARTS   AGE
autopilot-7958599dfc-kw7v6                              1/1     Running   0          8m19s
portworx-api-6mwpl                                      1/1     Running   0          8m19s
portworx-api-c2r2p                                      1/1     Running   0          8m19s
portworx-api-hm6hr                                      1/1     Running   0          8m19s
portworx-kvdb-4wh62                                     1/1     Running   0          2m27s
portworx-kvdb-922hq                                     1/1     Running   0          111s
portworx-kvdb-r9g2f                                     1/1     Running   0          2m20s
prometheus-px-prometheus-0                              2/2     Running   0          7m54s
px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c-4h4rr   2/2     Running   0          8m18s
px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c-5dxx6   2/2     Running   0          8m18s
px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c-szh8m   2/2     Running   0          8m18s
px-csi-ext-5f85c7ddfd-j7hfc                             4/4     Running   0          8m18s
px-csi-ext-5f85c7ddfd-qj58x                             4/4     Running   0          8m18s
px-csi-ext-5f85c7ddfd-xs6wn                             4/4     Running   0          8m18s
px-prometheus-operator-67dfbfc467-lz52j                 1/1     Running   0          8m19s
stork-6d6dcfc98c-7nzh4                                  1/1     Running   0          8m20s
stork-6d6dcfc98c-lqv4c                                  1/1     Running   0          8m20s
stork-6d6dcfc98c-mcjck                                  1/1     Running   0          8m20s
stork-scheduler-55f5ccd6df-5ks6w                        1/1     Running   0          8m20s
stork-scheduler-55f5ccd6df-6kkqd                        1/1     Running   0          8m20s
stork-scheduler-55f5ccd6df-vls9l                        1/1     Running   0          8m20s
```

Success!

We can also get the pxctl status. In this case, I would like to run the command directly from the pod, so I will create an alias using the worst bit of bash hacking known to mankind (any help would be appreciated):

```shell
alias pxctl="kubectl exec $(kubectl get pods -n kube-system | awk '/px-cluster/ {print $1}' | head -n 1) -n kube-system -- /opt/pwx/bin/pxctl"
```
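One reason that alias is fragile: because it is wrapped in double quotes, the $(...) runs once, when the alias is defined, so the pod name is frozen and the alias breaks if that pod is ever rescheduled. A shell function looks the pod up on every call. This is a sketch that assumes the pods carry the name=portworx label and that the main container in each px-cluster pod is named portworx (the pods show 2/2 containers, so naming it explicitly keeps kubectl from guessing):

```shell
# Sketch only: the label and container name are assumptions, not verified here.
# Drop the old alias first, otherwise bash would expand it inside the definition.
unalias pxctl 2>/dev/null || true

pxctl() {
  local pod
  # Re-resolve a Portworx pod on every call instead of once at definition time.
  pod=$(kubectl get pods -n kube-system -l name=portworx \
        -o jsonpath='{.items[0].metadata.name}') || return 1
  if [ -z "$pod" ]; then
    echo "no Portworx pod found in kube-system" >&2
    return 1
  fi
  # Pass every argument straight through to pxctl inside the pod.
  kubectl exec -n kube-system -c portworx "$pod" -- /opt/pwx/bin/pxctl "$@"
}
```

After that, pxctl status works exactly as before.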

pxctl status

Status: PX is operational

Telemetry: Disabled or Unhealthy

Metering: Disabled or Unhealthy

License: Trial (expires in 31 days)

Node ID: f3c9991f-9cdb-43c7-9d39-36aa388c5695

IP: 10.0.1.211

Local Storage Pool: 1 pool

POOL IO_PRIORITY RAID_LEVEL USABLE USED STATUS ZONE REGION

0 HIGH raid0 42 GiB 2.4 GiB Online default default

Local Storage Devices: 1 device

Device Path Media Type Size Last-Scan

0:1 /dev/sdb STORAGE_MEDIUM_MAGNETIC 42 GiB 27 Jul 22 20:25 UTC

total - 42 GiB

Cache Devices:

* No cache devices

Kvdb Device:

Device Path Size

/dev/sdc 32 GiB

* Internal kvdb on this node is using this dedicated kvdb device to store its data.

Cluster Summary

Cluster ID: px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c

Cluster UUID: 73368237-8d36-4c23-ab88-47a3002d13cf

Scheduler: kubernetes

Nodes: 3 node(s) with storage (3 online)

IP ID SchedulerNodeName Auth StorageNode Used Capacity Status StorageStatus Version Kernel OS

10.0.1.211 f3c9991f-9cdb-43c7-9d39-36aa388c5695 oc1-g7nvr-worker-2rt6s Disabled Yes 2.4 GiB 42 GiB Online Up (This node) 2.11.1-3a5f406 4.18.0-305.49.1.el8_4.x86_64 Red Hat Enterprise Linux CoreOS 410.84.202206212304-0 (Ootpa)

10.0.1.210 cfb2be04-9291-4222-8df6-17b308497af8 oc1-g7nvr-worker-cwxdm Disabled Yes 2.4 GiB 42 GiB Online Up 2.11.1-3a5f406 4.18.0-305.49.1.el8_4.x86_64 Red Hat Enterprise Linux CoreOS 410.84.202206212304-0 (Ootpa)

10.0.1.213 5a6d2c8b-a295-4fb2-a831-c90f525011e8 oc1-g7nvr-worker-27vkp Disabled Yes 2.4 GiB 42 GiB Online Up 2.11.1-3a5f406 4.18.0-305.49.1.el8_4.x86_64 Red Hat Enterprise Linux CoreOS 410.84.202206212304-0 (Ootpa)

Global Storage Pool

Total Used : 7.1 GiB

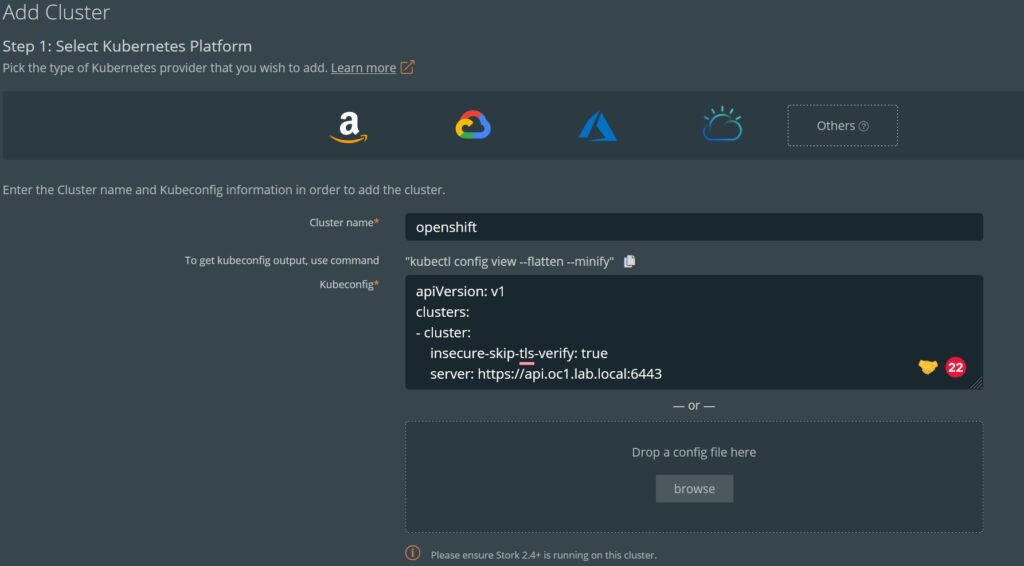

Total Capacity : 126 GiBFor the next bit of housekeeping, I want to get a kubectl config so I can add this cluster in to PX Backup. Because of the black magic when I used the oc command to log in, I’m going to export the kubecfg with:

```yaml
apiVersion: v1
clusters:
- cluster:
    insecure-skip-tls-verify: true
    server: https://api.oc1.lab.local:6443
  name: api-oc1-lab-local:6443
contexts:
- context:
    cluster: api-oc1-lab-local:6443
    namespace: default
    user: kube:admin/api-oc1-lab-local:6443
  name: default/api-oc1-lab-local:6443/kube:admin
current-context: default/api-oc1-lab-local:6443/kube:admin
kind: Config
preferences: {}
users:
- name: kube:admin/api-oc1-lab-local:6443
  user:
    token: REDACTED
```

Notice that the token above is redacted; you will need to substitute your own token from oc when pasting this into PX Backup.
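If you would rather not paste the token in by hand, kubectl can write the config with the token intact. This is a sketch that assumes the current context is the one created by oc login: --minify trims the output to that context, and --raw tells kubectl config view not to replace the token with REDACTED. The helper name and default output file name are arbitrary. (If you only need the token itself, oc whoami -t prints it.)

```shell
# Sketch only: hypothetical helper; the output file name is arbitrary.
export_px_backup_kubeconfig() {
  local out="${1:-oc1-kubeconfig.yaml}"
  # The file will contain a bearer token, so create it readable by the owner only.
  (umask 077 && kubectl config view --minify --raw > "$out")
}
```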

And as promised, the spec I used to install:

```yaml
# SOURCE: https://install.portworx.com/?operator=true&mc=false&kbver=&b=true&kd=type%3Dthin%2Csize%3D32&vsp=true&vc=vcenter.lab.local&vcp=443&ds=esx2-local3&s=%22type%3Dthin%2Csize%3D42%22&c=px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c&osft=true&stork=true&csi=true&mon=true&tel=false&st=k8s&promop=true
kind: StorageCluster
apiVersion: core.libopenstorage.org/v1
metadata:
  name: px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c
  namespace: kube-system
  annotations:
    portworx.io/install-source: "https://install.portworx.com/?operator=true&mc=false&kbver=&b=true&kd=type%3Dthin%2Csize%3D32&vsp=true&vc=vcenter.lab.local&vcp=443&ds=esx2-local3&s=%22type%3Dthin%2Csize%3D42%22&c=px-cluster-f51bdd65-f8d1-4782-965f-2f9504024d5c&osft=true&stork=true&csi=true&mon=true&tel=false&st=k8s&promop=true"
    portworx.io/is-openshift: "true"
spec:
  image: portworx/oci-monitor:2.11.1
  imagePullPolicy: Always
  kvdb:
    internal: true
  cloudStorage:
    deviceSpecs:
    - type=thin,size=42
    kvdbDeviceSpec: type=thin,size=32
  secretsProvider: k8s
  stork:
    enabled: true
    args:
      webhook-controller: "true"
  autopilot:
    enabled: true
  csi:
    enabled: true
  monitoring:
    prometheus:
      enabled: true
      exportMetrics: true
  env:
  - name: VSPHERE_INSECURE
    value: "true"
  - name: VSPHERE_USER
    valueFrom:
      secretKeyRef:
        name: px-vsphere-secret
        key: VSPHERE_USER
  - name: VSPHERE_PASSWORD
    valueFrom:
      secretKeyRef:
        name: px-vsphere-secret
        key: VSPHERE_PASSWORD
  - name: VSPHERE_VCENTER
    value: "vcenter.lab.local"
  - name: VSPHERE_VCENTER_PORT
    value: "443"
  - name: VSPHERE_DATASTORE_PREFIX
    value: "esx2-local4"
  - name: VSPHERE_INSTALL_MODE
    value: "shared"
```
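The SOURCE comment at the top of the spec records the PX-Central generator URL, so the same spec can be downloaded again later, for example when rebuilding this cluster. A minimal sketch, assuming the generator still serves that URL; fetch_px_spec is a hypothetical helper, and the URL must be single-quoted on the command line because it contains & characters:

```shell
# Sketch only: hypothetical helper.
# Usage: fetch_px_spec '<URL from the SOURCE comment>' px-install.yaml
fetch_px_spec() {
  local url="${1:?spec URL}" out="${2:-px-install.yaml}"
  # -f fails on HTTP errors instead of saving an error page as the spec.
  curl -fsSL "$url" -o "$out"
}
```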