There are a couple more concepts I want to cover before turning folks loose on a GitHub repo:

- Instead of a hostPath, we should be using a PVC (persistent volume claim) and PV (persistent volume).

- What if we need to give a pod access to an existing, external dataset?

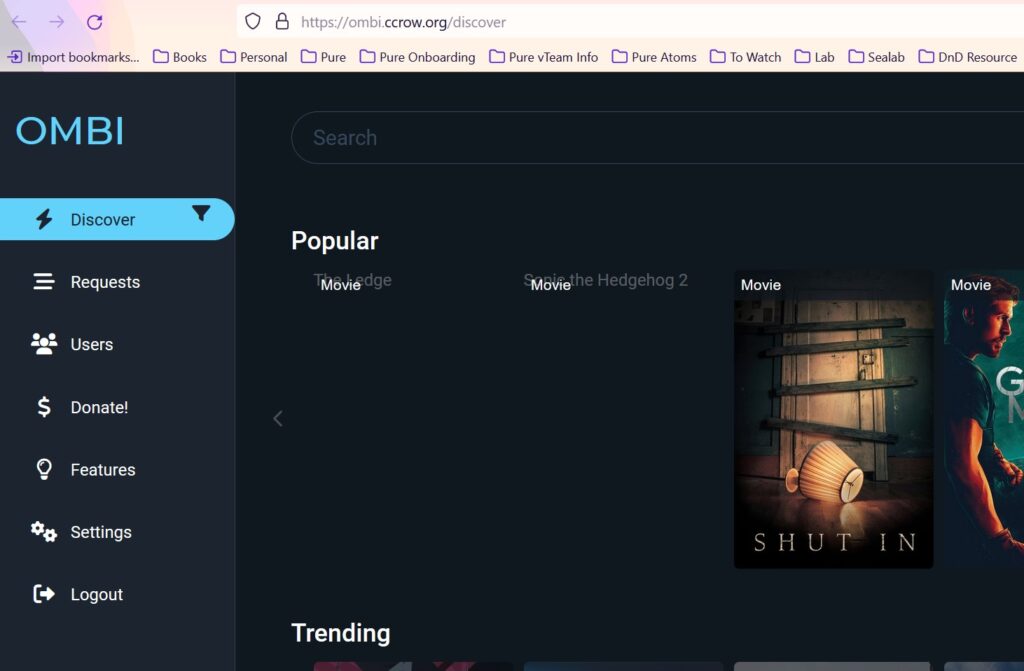

Radarr (https://radarr.video/) is a program that manages movies. It can request them through a download client, then rename them and move them into a shared movies folder. As such, our pod will need access to two shared locations:

- A shared downloads folder.

- A shared movies folder.

NFS Configuration

We need to connect to our media repository. This could mean mounting a share directly from the media server or from a central NAS. In either case, our best bet is to use NFS. I won’t cover setting up the NFS server here (ping me in the comments if you get stuck), but I will show how to connect to an NFS host.

If you run kubectl from a separate management box, run the following commands on the Kubernetes node itself, not on the management box. If you have been following these tutorials on a single Linux server, feel free to ignore this paragraph.

    # Install NFS client
    sudo apt install nfs-common -y
    # edit /etc/fstab
    sudo nano /etc/fstab

    # /etc/fstab: static file system information.
    #
    # Use 'blkid' to print the universally unique identifier for a
    # device; this may be used with UUID= as a more robust way to name devices
    # that works even if disks are added and removed. See fstab(5).
    #
    # <file system> <mount point> <type> <options> <dump> <pass>
    # / was on /dev/sysvg/root during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQM11kT2U143DtREAzGzzsoDCYbD2h7Ijke / xfs defaults 0 1
    # /boot was on /dev/sda2 during curtin installation
    /dev/disk/by-uuid/890c138e-badd-487e-9126-4fd11181cf5c /boot xfs defaults 0 1
    # /boot/efi was on /dev/sda1 during curtin installation
    /dev/disk/by-uuid/6A88-778F /boot/efi vfat defaults 0 1
    # /home was on /dev/sysvg/home during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQMZoJ5IYUmfVeAlOMYoeVSU3WStycNW6MX /home xfs defaults 0 1
    # /opt was on /dev/sysvg/opt during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQM1Vgg9WyNh823YnysItHcwA4kc0PAzrAq /opt xfs defaults 0 1
    # /tmp was on /dev/sysvg/tmp during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQMRA3d1jDZr8n9R23N2t4o1yxCyz2hiD3q /tmp xfs defaults 0 1
    # /var was on /dev/sysvg/var during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQMnhsacKjBubhXMyv1tK8D3umR3mnzSjbp /var xfs defaults 0 1
    # /var/log was on /dev/sysvg/log during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQM1IyfBAleLuw7m0G3UC9KNLrtmVAodTqu /var/log xfs defaults 0 1
    # /var/audit was on /dev/sysvg/audit during curtin installation
    /dev/disk/by-id/dm-uuid-LVM-QkcnzyIuoI6q532Z4OIYgQCxWKfPFEQMsrZUFWfY77xrwFBu3vSgbUfnJIp3AKA6 /var/audit xfs defaults 0 1
    /swap.img none swap sw 0 0
    #added nfs mounts to the end of the file
    10.0.1.8:/volume1/movies /mnt/movies nfs defaults 0 0
    10.0.1.8:/volume1/downloads /mnt/downloads nfs defaults 0 0

The last two lines (the NFS mounts) were added to the end of the file. Be sure to change the IP address and export paths to match your NFS server. Then go ahead and mount the exports:

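    # Create the mount points if they do not exist yet
    sudo mkdir -p /mnt/movies /mnt/downloads
    sudo mount /mnt/movies
    sudo mount /mnt/downloads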
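Before relying on the mounts, it can help to confirm that the NFS entries in /etc/fstab are what you expect and that both paths are really mounted. The following is only a minimal sketch, not part of the original setup: the function names list_nfs_entries and check_nfs_mounts are made up for illustration, and it assumes awk and mountpoint (from util-linux) are available on the node.

```shell
#!/bin/sh
# Hypothetical helpers (names are illustrative, not from the original post).

# Print "<export> <mount point>" for every NFS entry in an fstab-style file.
# Defaults to /etc/fstab; comment lines and non-NFS file systems are skipped.
list_nfs_entries() {
    awk '$1 !~ /^#/ && NF >= 3 && $3 ~ /^nfs4?$/ { print $1, $2 }' "${1:-/etc/fstab}"
}

# Report whether each NFS mount point listed in the file is currently mounted.
# Returns non-zero if at least one of them is not mounted.
check_nfs_mounts() {
    status=0
    for mp in $(list_nfs_entries "${1:-/etc/fstab}" | awk '{ print $2 }'); do
        if mountpoint -q "$mp"; then
            echo "mounted: $mp"
        else
            echo "NOT mounted: $mp"
            status=1
        fi
    done
    return "$status"
}
```

After sourcing the snippet, running check_nfs_mounts on the node should print one line per NFS entry. If a path shows up as not mounted, double-check the IP address and export path in /etc/fstab.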

PVC and Radarr configuration

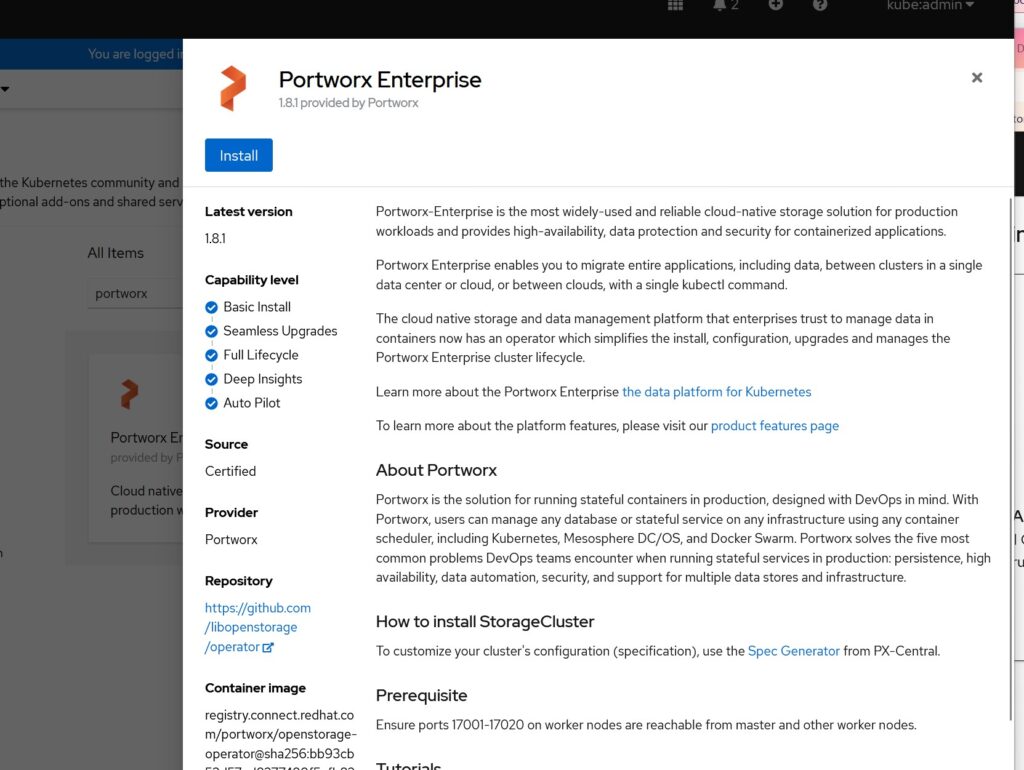

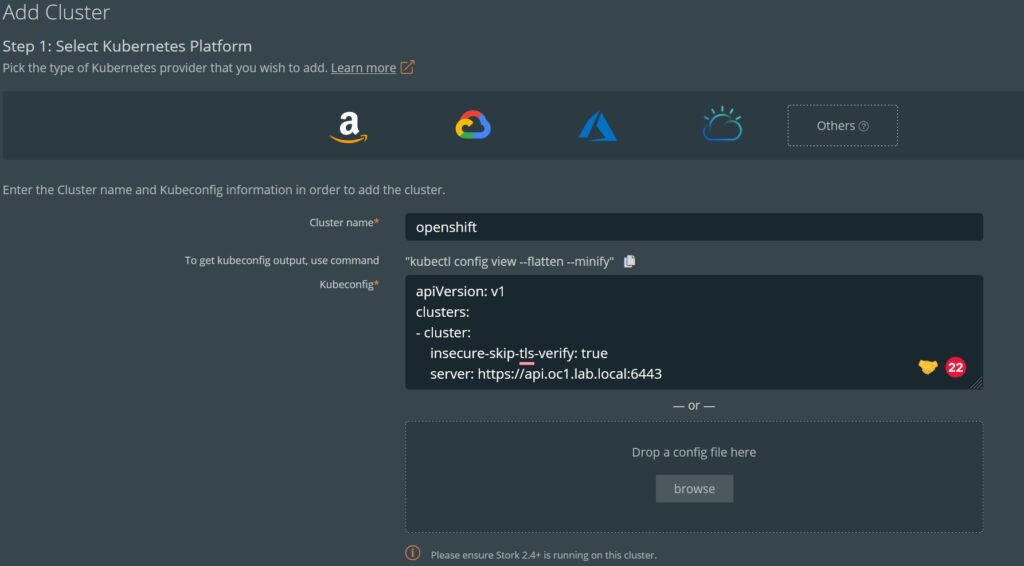

Next, we don’t want to use hostPath under most circumstances, so we need to get in the habit of using a PVC with a provisioner to manage volumes. This will make our architecture much more portable in the future.

A CSI driver allows automated provisioning of storage. Storage is often external to the Kubernetes nodes, and external storage is essential when we have a multi-node cluster. I would encourage everyone to read this article from Red Hat. The provisioner we will be using is rather simple: it creates a path on the host and stores files there. The outcome is the same as with a hostPath, but the difference is how we get there. Go ahead and install the local provisioner:

    # Install the provisioner
    kubectl apply -f https://raw.githubusercontent.com/rancher/local-path-provisioner/v0.0.24/deploy/local-path-storage.yaml

    # Patch the newly created storage class
    kubectl patch storageclass local-path -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

Now take a look at this manifest for Radarr (as always, a copy of this manifest is out on GitHub: https://github.com/ccrow42/plexstack):

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: radarr-pvc
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 10Gi
      storageClassName: local-path
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: radarr-deployment
      labels:
        app: radarr
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: radarr
      template:
        metadata:
          labels:
            app: radarr
        spec:
          containers:
            - name: radarr
              image: ghcr.io/linuxserver/radarr
              env:
                - name: PUID
                  value: "999"
                - name: PGID
                  value: "999"
              ports:
                - containerPort: 7878
              volumeMounts:
                - mountPath: /config
                  name: radarr-config
                - mountPath: /downloads
                  name: radarr-downloads
                - mountPath: /movies
                  name: radarr-movies
          volumes:
            - name: radarr-config
              persistentVolumeClaim:
                claimName: radarr-pvc
            - name: radarr-downloads
              hostPath:
                path: /mnt/downloads
            - name: radarr-movies
              hostPath:
                path: /mnt/movies
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: radarr-service
    spec:
      selector:
        app: radarr
      ports:
        - protocol: TCP
          port: 7878
          targetPort: 7878
      type: LoadBalancer
    ---
    kind: Ingress
    apiVersion: networking.k8s.io/v1
    metadata:
      name: ingress-radarr
      annotations:
        cert-manager.io/cluster-issuer: selfsigned-cluster-issuer #use a self-signed cert!
        kubernetes.io/ingress.class: nginx
    spec:
      tls:
        - hosts:
            - radarr.ccrow.local #using a local DNS entry. Radarr should not be public!
          secretName: radarr-tls
      rules:
        - host: radarr.ccrow.local
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: radarr-service
                    port:
                      number: 7878

Go through the above. At a minimum, lines 80 and 83 (the two radarr.ccrow.local host entries in the Ingress) should be changed to your own host name. You will also notice that our movies and downloads directories are hostPath volumes under the /mnt folder, which is where we mounted the NFS exports.
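Because the host name appears twice in the Ingress, a search-and-replace can be less error-prone than editing by hand. Here is a minimal sketch under a few assumptions: the manifest is saved locally as radarr.yaml (a hypothetical file name), radarr.example.lan stands in for your own DNS name, and set_radarr_host is an illustrative helper, not something from the repo.

```shell
#!/bin/sh
# Hypothetical helper: print a copy of the manifest with the example host
# name (radarr.ccrow.local) replaced by your own. The input file itself is
# left untouched. The new host must be a plain DNS name (no "/" or "&"),
# since it is pasted directly into the sed expression.
set_radarr_host() {
    manifest=$1
    new_host=$2
    sed "s/radarr\.ccrow\.local/${new_host}/g" "$manifest"
}
```

One way to use it would be: set_radarr_host radarr.yaml radarr.example.lan > radarr-local.yaml, followed by kubectl apply -f radarr-local.yaml. Writing to a second file keeps the original around in case you want to compare the two.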

You can connect to the service in one of two ways:

- LoadBalancer: run ‘kubectl get svc’ and record the IP address of the radarr-service, then connect with: http://<IPAddress>:7878

- Ingress: connect to the host name from the manifest (provided you have a DNS entry that points to the k8s node)
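For the LoadBalancer route, the lookup can be scripted instead of copying the address by hand. This is a rough sketch, assuming kubectl is already pointed at the right cluster and namespace and that the load balancer reports an IP rather than a host name. The helper names radarr_url and radarr_lb_ip are made up for illustration.

```shell
#!/bin/sh
# Hypothetical helpers for finding the Radarr URL.

# Build the web UI URL from an address and an optional port (default 7878).
radarr_url() {
    printf 'http://%s:%s\n' "$1" "${2:-7878}"
}

# Ask the cluster for the external IP assigned to radarr-service.
# If no address has been assigned yet, expect empty output or an error.
radarr_lb_ip() {
    kubectl get svc radarr-service \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

With both functions sourced, radarr_url "$(radarr_lb_ip)" should print the address to open in a browser.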

That’s it!